SORA: OpenAI's Text-to-Video Revolution

-- Generative AI has the potential to unlock creativity in everyone.

OpenAI's recent unveiling of Sora, its text-to-video AI model, has drawn enormous attention across the AI community. Sora represents a significant leap forward in AI technology: it turns a text prompt into a realistic video.

What is Sora?

First, let's examine the videos it generates (all videos are from OpenAI’s website). Given this prompt: "A stylish woman walks down a Tokyo street filled with warm glowing neon and animated city signage. She wears a black leather jacket, a long red dress, and black boots, and carries a black purse. She wears sunglasses and red lipstick. She walks confidently and casually. The street is damp and reflective, creating a mirror effect of the colorful lights. Many pedestrians walk about." Sora produces this video:

Remarkable, isn't it? And this is a one-minute video! Look at the reflections, the crowd, and the camera motion. Technology like this could reshape professional video production: anyone with a creative vision could craft professional-quality short videos, a feat previously beyond reach for most people.

OpenAI has opened access to a number of visual artists, designers, and filmmakers, inviting their feedback to refine the model and better serve creative professionals. With its remarkable capabilities and growing accessibility, Sora has quickly been described as the video equivalent of ChatGPT, further solidifying OpenAI's position at the forefront of AI innovation.

According to OpenAI, Sora demonstrates proficiency in generating intricate scenes featuring multiple characters, precise movement patterns, and faithful representations of subjects and backgrounds. The model adeptly interprets user prompts and comprehends the spatial dynamics within the physical world, enabling it to fulfill user requests with precision and realism.

Prompt: Historical footage of California during the gold rush.

This example suggests that Sora can capture complex dynamics and fine visual detail, giving viewers a realistic and richly detailed result.

OpenAI says the Sora model has a deep understanding of language, allowing it to interpret prompts accurately and generate compelling characters that express vivid emotions. Sora can also create multiple shots within a single generated video while keeping characters and visual style consistent. This shows that Sora can follow complex textual instructions and maintain visual coherence, giving viewers a unified and engaging story.

Text Prompt Input: A cat waking up its sleeping owner demanding breakfast. The owner tries to ignore the cat, but the cat tries new tactics and finally the owner pulls out a secret stash of treats from under the pillow to hold the cat off a little longer.

OpenAI has also acknowledged weaknesses in the current Sora model. It may struggle to simulate the physics of a complex scene accurately and may not understand specific instances of cause and effect. For example, a person might take a bite out of a cookie, but afterward the cookie may show no bite mark. The model may also confuse spatial details of a prompt, such as mixing up left and right, and may struggle with precise descriptions of events that unfold over time, such as following a specific camera trajectory. Despite Sora's considerable advances in video generation, these limitations show that its handling of intricate physical interactions and temporal coherence still needs refinement.

Text Prompt Input: Archeologists discover a generic plastic chair in the desert, excavating and dusting it with great care. (Failure case)

SORA Model: Diffusion Transformer (DiT)

For details on Sora’s model, see OpenAI's technical report.

Sora is a diffusion model. It starts from a video that looks like static noise and gradually removes that noise over many steps. This approach lets Sora generate an entire video at once or extend an existing video to make it longer. To keep a subject consistent even when it temporarily goes out of view, the model is given foresight of many frames at a time. Like the GPT models, Sora is built on the Transformer architecture, which gives it strong scaling behavior.
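To make the "start from noise, then remove it step by step" idea concrete, here is a minimal NumPy sketch of a deterministic (DDIM-style) denoising loop on a tiny toy video. It is an illustration under stated assumptions, not Sora's implementation: the noise schedule, the toy video, and the function names (`make_toy_video`, `oracle_noise_predictor`, `denoise`) are all hypothetical, and the "model" is an oracle that is handed the clean video, standing in for a trained, text-conditioned Transformer.

```python
import numpy as np

def make_toy_video(frames=4, size=8):
    """Tiny grayscale 'video': a bright square that shifts right each frame."""
    video = np.zeros((frames, size, size))
    for f in range(frames):
        video[f, 2:5, f:f + 3] = 1.0
    return video

def oracle_noise_predictor(x_t, alpha_bar_t, clean_video):
    """Stand-in for the trained network (assumption for illustration).

    A real diffusion model learns to predict the noise from x_t, the step,
    and the text prompt. This oracle cheats by using the clean video."""
    return (x_t - np.sqrt(alpha_bar_t) * clean_video) / np.sqrt(1.0 - alpha_bar_t)

def denoise(clean_video, num_steps=50, seed=0):
    """Run a deterministic denoising loop from pure noise to a clean video."""
    rng = np.random.default_rng(seed)
    # Assumed schedule: fraction of signal kept at each noise level,
    # index 0 = almost clean, last index = almost pure noise.
    alpha_bars = np.linspace(0.999, 0.001, num_steps)

    x = rng.standard_normal(clean_video.shape)  # start from static noise
    errors = []
    for t in range(num_steps - 1, -1, -1):
        a_t = alpha_bars[t]
        eps = oracle_noise_predictor(x, a_t, clean_video)
        # Estimate the clean video implied by the predicted noise.
        x0_hat = (x - np.sqrt(1.0 - a_t) * eps) / np.sqrt(a_t)
        # Move to the next, less noisy level (fully clean after the last step).
        a_prev = alpha_bars[t - 1] if t > 0 else 1.0
        x = np.sqrt(a_prev) * x0_hat + np.sqrt(1.0 - a_prev) * eps
        errors.append(float(np.abs(x - clean_video).mean()))
    return x, errors

if __name__ == "__main__":
    video = make_toy_video()
    sample, errors = denoise(video)
    print("mean error after first step:", errors[0])
    print("mean error after last step:", errors[-1])
```

Because the oracle already knows the answer, the loop only shows the mechanics of iterative refinement: each pass keeps a little less noise than the one before. In a real system the hard part is training the network that replaces the oracle.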

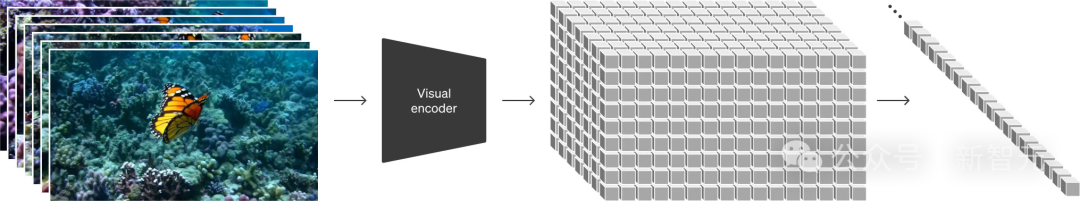

OpenAI represents videos and images as collections of smaller units of data called patches, each of which plays a role similar to a token in GPT. This unified representation allows Diffusion Transformers to be trained on a wider range of visual data than was previously feasible, spanning different durations, resolutions, and aspect ratios.
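The patch idea can be sketched in a few lines of NumPy. The example below cuts a video array into small space-time blocks and flattens each block into one token-like vector. It is a simplified illustration, not OpenAI's code: the function names (`patchify`, `unpatchify`) and the patch sizes are hypothetical, and it works on raw pixels for clarity.

```python
import numpy as np

def patchify(video, pt=2, ph=4, pw=4):
    """Split a video of shape (T, H, W, C) into flattened space-time patches.

    Returns an array of shape (num_patches, pt * ph * pw * C), where each row
    is one patch spanning pt frames and a ph x pw pixel region."""
    T, H, W, C = video.shape
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    # Split each axis into (number of patches, patch size).
    x = video.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    # Put the patch-grid axes first and the within-patch axes last.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    return x.reshape(-1, pt * ph * pw * C)

def unpatchify(tokens, shape, pt=2, ph=4, pw=4):
    """Inverse of patchify: rebuild the (T, H, W, C) video from its patches."""
    T, H, W, C = shape
    x = tokens.reshape(T // pt, H // ph, W // pw, pt, ph, pw, C)
    x = x.transpose(0, 3, 1, 4, 2, 5, 6)
    return x.reshape(T, H, W, C)

if __name__ == "__main__":
    # Two clips with different durations, resolutions, and aspect ratios.
    short_wide = np.zeros((4, 8, 12, 3))
    long_square = np.zeros((8, 16, 16, 3))
    print(patchify(short_wide).shape)   # sequence length differs per clip,
    print(patchify(long_square).shape)  # but every patch has the same size
```

The point of the sketch is that clips of different shapes become sequences of different lengths whose elements all have the same size, which is the kind of input a Transformer handles naturally.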

Sora builds on past research in the DALL·E and GPT models. It uses the recaptioning technique from DALL·E 3, which generates highly descriptive captions for the visual training data. As a result, the model follows the user's text instructions in the generated video more faithfully.

Sora is not limited to text prompts: it can also take a still image and animate it into a video. In addition, it can extend an existing video or fill in missing frames.

OpenAI sees Sora as a foundation for models that can understand and simulate the real world, a capability it believes will be an important milestone toward Artificial General Intelligence (AGI).

Conclusion

Sora represents a significant milestone in AI technology, offering a powerful tool for turning text prompts into visual narratives. Its approach, which builds on earlier models and uses a diffusion transformer, shows a strong ability to understand and generate complex visual content. Despite its current limitations, Sora has considerable room for further development and refinement, and it paves the way for more capable and versatile AI models. As OpenAI continues to develop the model in collaboration with creative professionals, Sora reflects the organization's stated commitment to advancing AI while ensuring its responsible and ethical use. By working toward models that simulate and understand the real world, Sora points to a promising path toward Artificial General Intelligence and opens new possibilities for creative expression and storytelling.

OpenAI's Sora is poised to change how the short video and media production industry generates content. This advance will also drive demand for the high-end GPUs needed to run such intensive AI computations, so the GPU market is likely to feel a significant impact as well.